In 2011, living in a city with more dented cars than I'd ever seen in my life, I wanted traffic data for a story I was working on, so I wrote my first real web scraper. I still remember it pretty well: it was written in PHP (barf), ran every five minutes, grabbed the current collisions police were responding to across the city and dumped them into a database. Immediately after writing a story based on the data, I threw the scraper on GitHub and never touched it again (RIP). It got me wondering how many journalists had rewritten and shared scrapers for the exact same sites and never maintained them. I pictured a mountain of useless, time-consuming code floating around in cyberspace. Journalists write too many scrapers and we're generally terrible at maintaining them.

There are plenty of reasons why we don't maintain our scrapers. In 2013 I built a web tool to help journalists do background research on political candidates. By entering a name into a search form, the tool would scrape up to fifteen local government sites containing public information. It didn't take long to figure out that maintaining this tool was a full-time job. The tiniest change on one of the sites would break the whole thing. Fixing it was a tedious process that went something like this: read the logs to get an idea of which website broke the system, visit the website in my web browser, manually test the scrape process, fix the broken code and re-deploy the web application.

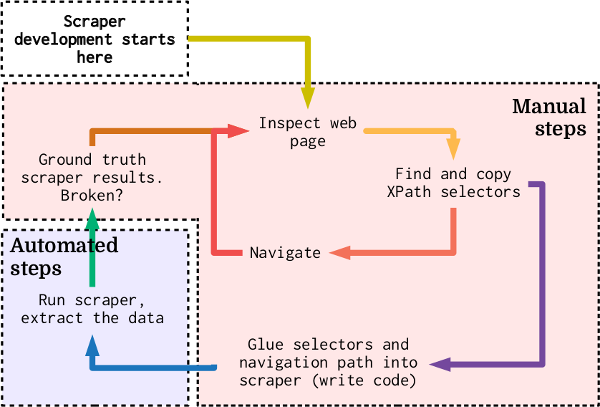

A flowchart of the typical web scraper development process. Development starts at the top left and continues with manual steps: analyzing the website, reading the source code, extracting XPaths and pasting them into code. The single automated step consists of running the scraper. Because scrapers built this way are prone to breaking or extracting subtly incorrect data, the output needs to be checked after every scrape. This increases the overall maintenance requirements of operating a web scraper.

Leap forward to 2018: I was running recurring, scheduled scrapes on open government sites for several media organizations, and I was still building my scrapers the old-fashioned way. The fundamental problem with these scrapers was the way they identified web page elements to interact with or extract from: XPath selectors.

To understand XPath, you need to understand that browsers "see" webpages as a hierarchical tree of elements, known as the Document Object Model (DOM)[3], starting from the topmost element. Here's an example:

Example webpage DOM

                  html
                   |
           -----------------
           |               |
         head            body
           |               |
       ---------     -------------
       |       |     |     |     |
     title   style  h1    div   footer
                           |
                        button

XPath is a language for identifying parts of a webpage by tracing the path from the top of the DOM to the specified elements. For example, to identify the button in our example DOM above, we'd start at the top html tag and work down to the button, which gives us the following XPath selector:

/html/body/div/button

As you can see, the XPath selector leading to the button depends on the elements above it. So, if a web developer decides to change the layout and switches the div to something else, then our XPath—and our scraper—is broken.
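To see that fragility concretely, here is a minimal sketch using Python's standard-library ElementTree, which supports a limited subset of XPath. The markup strings and the `find_button` helper are made up for illustration, and the path is written relative to the root `html` element, so `body/div/button` stands in for `/html/body/div/button`.

```python
import xml.etree.ElementTree as ET

# Hypothetical page matching the example DOM (well-formed so ElementTree can parse it).
ORIGINAL = (
    "<html><head><title>Demo</title><style></style></head>"
    "<body><h1>Records</h1><div><button>Search</button></div>"
    "<footer></footer></body></html>"
)

# The same page after a redesign that swaps the div for a section.
REDESIGNED = ORIGINAL.replace("<div>", "<section>").replace("</div>", "</section>")


def find_button(markup):
    """Return the button element reached by the fixed path, or None."""
    root = ET.fromstring(markup)  # root is the <html> element
    return root.find("body/div/button")


print(find_button(ORIGINAL) is not None)    # the path resolves on the original page
print(find_button(REDESIGNED) is not None)  # the path no longer resolves after the change
```

Nothing about the button itself changed between the two versions. Only its parent did, and that alone is enough to make the lookup come back empty.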

I wanted a tool that would let me describe a scrape using a set of standardized options. After partnering with a few journalism organizations, I came up with three common investigative scrape patterns:

- Crawl a site and download documents (e.g., download all PDFs on a site).

- Query and grab a single result page (e.g., enter a package tracking number and get a page saying where it is).

- Query and save all result pages (e.g., a Google search with many results pages).

The result of this work is AutoScrape, a resilient web scraper that can perform these kinds of scrapes and survive a variety of site changes. A set of basic configuration options is enough to solve most common journalistic scraping problems. To reduce the maintenance required when running scrapes, it operates on three main principles:

Navigate like people do.

When people use websites they look for visual cues. These are generally standardized and rarely change. For example, search forms typically have a "Search" or "Submit" button. AutoScrape takes advantage of this. Instead of collecting DOM element selectors, users only need to provide the text of pages, buttons and forms to interact with while crawling a site.
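As a rough illustration of matching by visible text rather than by selector, here is a sketch built on Python's standard-library `html.parser`. It is not AutoScrape's implementation: the `FormTextFinder` class, the `match_form` helper, the sample page and the choice of case-insensitive matching are all assumptions made for the example.

```python
from html.parser import HTMLParser


class FormTextFinder(HTMLParser):
    """Collect the visible text inside each <form> on a page."""

    def __init__(self):
        super().__init__()
        self.forms = []   # one text string per form, in page order
        self._depth = 0   # greater than zero while inside a <form>

    def handle_starttag(self, tag, attrs):
        if tag == "form":
            self._depth += 1
            self.forms.append("")
        elif self._depth and tag in ("input", "button"):
            # Submit buttons often carry their label in a value attribute.
            value = dict(attrs).get("value")
            if value:
                self.forms[-1] += " " + value

    def handle_endtag(self, tag):
        if tag == "form" and self._depth:
            self._depth -= 1

    def handle_data(self, data):
        if self._depth:
            self.forms[-1] += " " + data


def match_form(html, text):
    """Return the index of the first form whose text contains `text`, or None."""
    finder = FormTextFinder()
    finder.feed(html)
    for index, form_text in enumerate(finder.forms):
        if text.lower() in form_text.lower():  # case-insensitive by assumption
            return index
    return None


# Hypothetical page: a newsletter form followed by the search form we want.
PAGE = """
<form action="/newsletter"><input type="email"><button>Sign up</button></form>
<div class="wrapper"><form action="/lookup">
  <h2>SEARCH HERE</h2>
  <input name="q"><input type="submit" value="Search">
</form></div>
"""
print(match_form(PAGE, "search here"))
```

Because the lookup ignores where the form sits in the page, wrapping it in another element or renaming its classes does not affect the match.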

Extraction as a separate step.

Web scrapers spend most of their time interacting with sites, submitting forms and following links. Piling data extraction onto that process increases the risk of having the scraper break. For this reason, AutoScrape separates site navigation and data extraction into two separate tasks. While AutoScrape is crawling and querying a site, it saves the rendered HTML for each page visited.
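The two-phase idea can be sketched in a few lines of Python. This is only an illustration of the design, not AutoScrape's code: `save_page`, `extract_titles`, the hash-based file naming and the title regex (standing in for a real extraction step) are all hypothetical.

```python
import hashlib
import re
from pathlib import Path


def save_page(html, url, out_dir):
    """Navigation phase: store the rendered HTML untouched, named by a hash of the URL."""
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    name = hashlib.sha256(url.encode("utf-8")).hexdigest()[:16] + ".html"
    path = out_dir / name
    path.write_text(html, encoding="utf-8")
    return path


def extract_titles(out_dir):
    """Extraction phase: runs later and reads only the saved files, never the live site."""
    titles = {}
    for path in sorted(Path(out_dir).glob("*.html")):
        html = path.read_text(encoding="utf-8")
        match = re.search(r"<title>(.*?)</title>", html, re.S | re.I)
        titles[path.name] = match.group(1).strip() if match else None
    return titles
```

If the extraction logic turns out to be wrong, it can be fixed and re-run against the saved pages without visiting the site again.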

Protect extraction from page changes as much as possible.

Extracting data from HTML pages typically involves taking XPaths, extracting data into columns, converting these columns of data into record rows and exporting them. In AutoScrape, we avoid this entirely by using an existing domain-specific template language for extracting JSON from HTML called Hext. Hext templates, as they're called, look a lot like HTML but include syntax for data extraction. To ease the construction of these templates, the AutoScrape system includes a Hext template builder. Users load one of the scraped pages containing data and, for a single record, click on and label each of the values in it. Once this is done, the annotated HTML of the selected record can be converted into a Hext template. JSON data extraction is then a matter of bulk processing the scraped pages with the Hext tool and template.

An example extraction of structured data from a source HTML document (left), using a simple Hext template (center) and the resulting JSON (right). If the HTML source document contained more records in place of the comment, the Hext template would have extracted them as additional JSON objects.

Hext templates are superior to the traditional XPath method of data extraction on pages that contain few class names or IDs, as is common on primitive government sites. While an XPath to an element can be broken by changes made to ancestor elements, breaking a Hext template requires changing the actual chunk of HTML that contains data.

Using AutoScrape

Here are a few examples of what various scrape configurations look like using AutoScrape. In all of these cases, we're just going to use the command line version of the tool: scrape.py.

Let's say you want to crawl an entire website, saving all HTML and style sheets (no screenshots):

    ./scrape.py \
      --maxdepth -1 \
      --output crawled_site \
      'https://some.page/to-crawl'

In the above case, we've set the scraper to crawl infinitely deep into the site. But if you want to only archive a single webpage, grabbing both the code and a full-length screenshot (PNG) for future reference, you could do this:

    ./scrape.py \
      --full-page-screenshots \
      --load-images \
      --maxdepth 0 \
      --save-screenshots \
      --driver Firefox \
      --output archived_webpage \
      'https://some.page/to-archive'

Finally, we have the real magic: interactively querying web search portals. In this example, we want AutoScrape to do a few things: load a webpage, look for a search form containing the text "SEARCH HERE", select a date (January 20, 1992) from a date picker, enter "Brandon" into an input box and then click "Next ->" buttons on the result pages. Such a scrape is described like this:

    ./scrape.py \
      --output search_query_data \
      --form-match "SEARCH HERE" \
      --input "i:0:Brandon,d:1:1992-01-20" \
      --next-match "Next ->" \
      'https://some.page/search?s=newquery'
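The `--input` value in the command above packs several form-filling steps into one string. The sketch below shows one way such a string could be split apart in Python. It is not AutoScrape's own parser: the `parse_input_spec` helper is hypothetical, and reading `i` as a text input, `d` as a date picker and the middle number as the field's position in the form is an interpretation inferred from the description of this example.

```python
from datetime import date


def parse_input_spec(spec):
    """Split a spec like 'i:0:Brandon,d:1:1992-01-20' into (kind, index, value) steps."""
    steps = []
    for part in spec.split(","):
        # maxsplit=2 keeps any colons that appear inside the value itself.
        kind, index, value = part.split(":", 2)
        if kind == "d":
            # Assumed to be an ISO-formatted date destined for a date picker.
            value = date.fromisoformat(value)
        steps.append((kind, int(index), value))
    return steps


for step in parse_input_spec("i:0:Brandon,d:1:1992-01-20"):
    print(step)
```

A value that itself contains a comma would be split incorrectly by this sketch, so a real parser would need an escaping rule.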

All of these commands create a folder that contains the HTML pages encountered during the scrape. The pages are categorized by the general type of page they are: pages viewed when crawling, search form pages, search result pages and downloads.

    autoscrape-data/
    ├── crawl_pages/
    ├── data_pages/
    ├── downloads/
    └── search_pages/

Extracting data from these HTML pages is a matter of building a Hext template and then running the extraction tool. Hext templates can be either written from scratch or built with the Hext builder web tool included with AutoScrape. This is best illustrated in the quickstart video.

Currently, I'm working with the Computational Journalism Workbench team to integrate AutoScrape into their web platform so that you won't need to use the command line at all. Until that happens, you can go to the GitHub repo to learn how to set up a graphical version of AutoScrape.

Fully Automated Scrapers and the Future

In addition to building a simple, straightforward tool for scraping websites, I had a secondary, loftier motive for creating AutoScrape: using it as a testbed for fully automated scrapers.

Ultimately, I want to be able to hand AutoScrape a URL and have it automatically figure out what to do. Search forms contain clues about how to use them in both their source code and displayed text. This information can likely be used by a machine learning[10] model to figure out how to search a form, opening up the possibility of fully automated scrapers.

If you're interested in improving web scraping, or just want to chat, feel free to reach out. I'm in this for the long haul. So stay tuned.